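Markov chains are a way to model a system that moves through a sequence of states. At each step, the current state transitions to another state (possibly itself) according to some probability. The probability of being in a certain state depends only on the previous state, not on the rest of the history. This is known as the Markov property and is represented by the following equation:

$$\Pr(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_1 = x_1) = \Pr(X_{n+1} = x \mid X_n = x_n)$$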

Example:

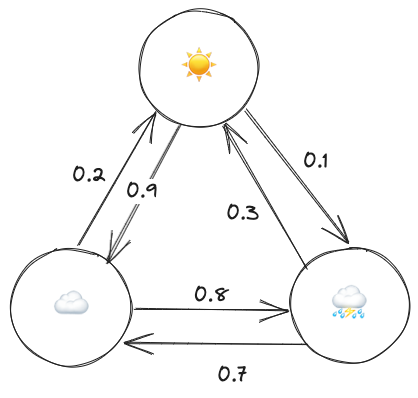

Let’s try to model the weather with a Markov chain. Each day can be in one of three states: sunny, cloudy or rainy.

Each circle represents a state, and each arrow a transition between states. The number on an arrow is the probability of taking that transition. Note that the probabilities on the arrows leaving a state always sum to 1. Another important thing to note is that the next state depends only on the current state. For example, say we have observed the sequence of days ☀️, ☁️, ☀️, ☁️. The probability that the next day is ⛈ depends only on the most recent state, ☁️. We can thus write:

$$\Pr(X_5 = \text{rainy} \mid X_1 = \text{sunny}, X_2 = \text{cloudy}, X_3 = \text{sunny}, X_4 = \text{cloudy}) = \Pr(X_5 = \text{rainy} \mid X_4 = \text{cloudy})$$

(Sorry for the inconsistent emojis, blame Obsidian.)
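To see the Markov property in action, here is a minimal Python sketch that simulates a run of days. The transition probabilities are made-up placeholders, not the values from the diagram, and the names `TRANSITIONS`, `next_state` and `simulate` are hypothetical. Sampling the next day only ever looks at the last state in the list, which is exactly the "depends only on the previous state" assumption.

```python
import random

# Hypothetical transition probabilities (assumed, NOT read off the diagram):
# TRANSITIONS[today][tomorrow] = Pr(tomorrow | today)
TRANSITIONS = {
    "sunny":  {"sunny": 0.6, "cloudy": 0.3, "rainy": 0.1},
    "cloudy": {"sunny": 0.3, "cloudy": 0.4, "rainy": 0.3},
    "rainy":  {"sunny": 0.2, "cloudy": 0.4, "rainy": 0.4},
}


def next_state(current, rng):
    """Sample tomorrow's weather using only today's weather."""
    row = TRANSITIONS[current]
    # Keys and values come out in the same order, so weights line up with states.
    return rng.choices(list(row), weights=list(row.values()), k=1)[0]


def simulate(start, days, seed=0):
    """Return a list of `days` states beginning with `start` (days >= 1)."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    states = [start]
    for _ in range(days - 1):
        # Only states[-1] is consulted; earlier days are ignored.
        states.append(next_state(states[-1], rng))
    return states


print(simulate("sunny", 10))
```

Changing the earlier entries of `states` would not change the distribution of the next sample; only the last entry matters.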

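We can write out all the transition probabilities in a single matrix, called the transition matrix. With the states ordered sunny, cloudy, rainy, the entry $P_{ij}$ is the probability of moving from state $i$ to state $j$ (read off the arrows in the diagram), so each row sums to 1:

$$P = \begin{pmatrix} P_{11} & P_{12} & P_{13} \\ P_{21} & P_{22} & P_{23} \\ P_{31} & P_{32} & P_{33} \end{pmatrix}, \qquad P_{ij} = \Pr(X_{n+1} = j \mid X_n = i), \qquad \sum_j P_{ij} = 1$$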
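In code, a transition matrix is just a list of rows. The Python sketch below uses assumed, illustrative probabilities rather than the ones in the diagram, and `is_stochastic` and `step` are hypothetical helper names. It checks that each row is a valid probability distribution and pushes a distribution over today's weather forward one day by multiplying a row vector by the matrix.

```python
# Hypothetical weather transition matrix (assumed values, not the diagram's).
# Row i holds Pr(next = j | current = i).
STATES = ["sunny", "cloudy", "rainy"]
P = [
    [0.6, 0.3, 0.1],  # from sunny
    [0.3, 0.4, 0.3],  # from cloudy
    [0.2, 0.4, 0.4],  # from rainy
]


def is_stochastic(matrix, tol=1e-9):
    """True if every entry is non-negative and every row sums to 1."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) <= tol
        for row in matrix
    )


def step(dist, matrix):
    """One day forward: new[j] = sum_i dist[i] * matrix[i][j] (row vector times P)."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


today = [1.0, 0.0, 0.0]    # we know today is sunny
tomorrow = step(today, P)  # equals the "sunny" row of P
print(dict(zip(STATES, tomorrow)))
```

Starting from a certain state just picks out that state's row; starting from a mixed distribution blends the rows by their weights.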

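The stationary distribution of a Markov chain is a probability distribution $\pi$ over the states that remains unchanged as time progresses: if today's weather follows $\pi$, so does tomorrow's. Writing $\pi$ as a row vector, it satisfies:

$$\pi P = \pi, \qquad \sum_i \pi_i = 1$$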

In other words, $\pi$ is invariant under $P$: one more step of the chain leaves it unchanged.
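One way to find the stationary distribution numerically is to apply the chain over and over until the distribution stops changing (power iteration). Here is a minimal Python sketch with the same assumed probabilities as before (not the diagram's); `stationary_by_iteration` is a hypothetical name. It assumes the chain actually converges, for example because it is irreducible and aperiodic, which is not true of every Markov chain.

```python
# Hypothetical transition matrix (assumed values, not the diagram's).
# Row i holds Pr(next = j | current = i) for sunny, cloudy, rainy.
P = [
    [0.6, 0.3, 0.1],
    [0.3, 0.4, 0.3],
    [0.2, 0.4, 0.4],
]


def step(dist, matrix):
    """Row vector times matrix: one step of the chain."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def stationary_by_iteration(matrix, tol=1e-12, max_iter=10_000):
    """Repeat pi <- pi P until successive iterates differ by less than tol.

    Assumes the chain converges (e.g. irreducible and aperiodic);
    a periodic chain can oscillate, hence the max_iter guard.
    """
    n = len(matrix)
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(max_iter):
        nxt = step(pi, matrix)
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    raise RuntimeError("power iteration did not converge")


print(stationary_by_iteration(P))
```

With these made-up numbers the result settles at roughly (0.393, 0.361, 0.246), and applying `step` once more leaves it unchanged up to rounding.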

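A cool thing to note is that $\pi$ is an eigenvector of the transition matrix $P$ with eigenvalue 1. Strictly speaking, since $\pi$ multiplies $P$ from the left, it is a left eigenvector of $P$, which is the same as an ordinary eigenvector of $P^\top$:

$$P^\top \pi^\top = 1 \cdot \pi^\top$$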
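The eigenvector view also gives a direct way to compute the stationary distribution. Writing the transition matrix as `P`, we solve the linear system `(P^T - I) x = 0` for the eigenvalue-1 eigenvector, replacing one redundant equation with the constraint that the entries sum to 1. This minimal Python sketch uses Gauss-Jordan elimination with `fractions.Fraction` for exact arithmetic; the probabilities are the same assumed values as above and `stationary_by_eigenvector` is a hypothetical name. It assumes the solution is unique (for example, an irreducible chain).

```python
from fractions import Fraction as F

# Hypothetical transition matrix as exact fractions (assumed values).
# Row i holds Pr(next = j | current = i) for sunny, cloudy, rainy.
P = [
    [F(6, 10), F(3, 10), F(1, 10)],
    [F(3, 10), F(4, 10), F(3, 10)],
    [F(2, 10), F(4, 10), F(4, 10)],
]


def stationary_by_eigenvector(matrix):
    """Solve (P^T - I) x = 0 with sum(x) = 1, i.e. the eigenvalue-1 eigenvector of P^T.

    One equation of (P^T - I) x = 0 is redundant, so the last row is replaced
    by the normalisation constraint. Assumes the solution is unique.
    """
    n = len(matrix)
    # A = P^T - I, so A[i][j] = P[j][i] - (1 if i == j else 0).
    a = [[matrix[j][i] - (1 if i == j else 0) for j in range(n)] for i in range(n)]
    b = [F(0)] * n
    a[-1] = [F(1)] * n  # sum_i x_i = 1
    b[-1] = F(1)
    # Gauss-Jordan elimination, swapping rows when a pivot is zero.
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
                b[r] -= factor * b[col]
    # A is now diagonal, so each unknown is b[i] / a[i][i].
    return [b[i] / a[i][i] for i in range(n)]


pi = stationary_by_eigenvector(P)
print(pi)
```

For these assumed probabilities the result is exactly (24/61, 22/61, 15/61), roughly 39% sunny, 36% cloudy and 25% rainy days, matching the power-iteration answer. Exact fractions avoid rounding issues for a tiny example; a larger chain would typically use floating point and a linear algebra library instead.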

References: