maths The Baum-Welch algorithm helps us find the parameters of a Hidden Markov Model given a sequence of observation.

Given observations and parameters we can find such that using the Baum-Walch algorithm. It only guarantees a local minimum.

Let’s call the probability of being in state at time then state at time given observation and parameters . We can write it as such:

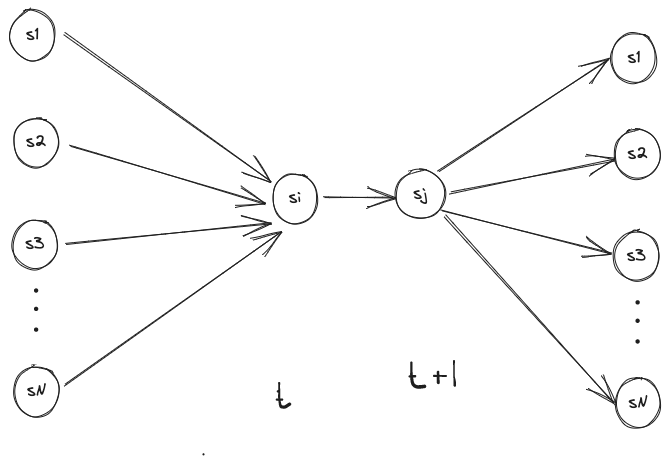

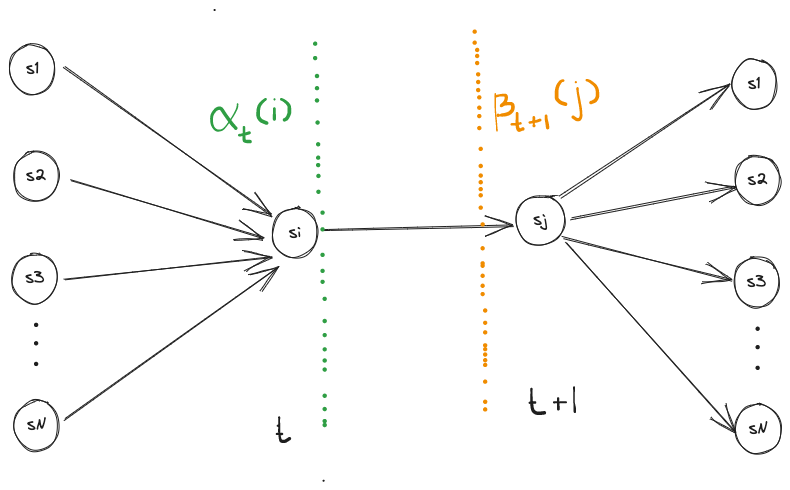

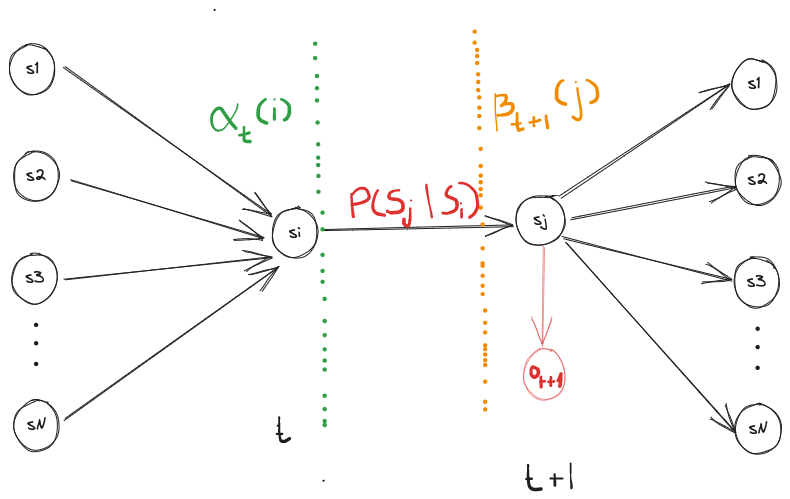

We can illustrate is as:

In the forward algorithm we computed which captures the probability that we are in state i given the observations that came before it. In the backward algorithm we computed which captures the probability that we will be in the given state knowing what’s going to come in the future.

We have :

The likelihood that we end up in state at time given all our observation up until this point is captured by . The likelihood that we end up in state at time knowing all our observation after this point is captured by .

Now that we know the probability of being in state and , we just need to tie them together by multiplying it by the transition probability from state to , and the probability of being in state given our observation at time :

We can rewrite as:

Note that we divide by to normalize our likelihood because we are looking for probabilities.

We can write the probability of being in state at time as:

Now we have:

and

Now we can update our parameters.

To update our stationary distribution:

To update our transition matrix:

To update our emission probabilities:

*Note means such that observation at time is , we only sum terms that meets this condition.

Further Readings: